Until recently, defining the needs of a computer facility was a fairly clear process. Over the last twenty years, the characteristics of data centers did not change significantly, and their configuration was well understood by both customers and engineering consultants.

The world's shift toward AI applications is changing data center engineering dramatically. Conventions that were the cornerstones of design no longer hold. Power density, cooling methods, and system behavior are rewriting the “rules of the game”, and data centers are changing beyond recognition. The boundary between IT equipment and facility infrastructure is blurring, which presents new challenges to all parties.

On January 20, 2025, the SEEEI – The Society of Electrical and Electronics Engineers in Israel launched a new forum – the Data Center Forum.

On that occasion, I presented the challenges we face in data center design in the AI era.

From the 1990s to the present, data center design rested on a common set of assumptions, including:

- Electricity consumption was stable and constant, and each server was fed from two sources.

- The cooling system of the computers was based on air-cooling of the data hall or pod.

- The average power consumption was 6 kW to 15 kW per rack, depending on the characteristics of the client and the application.

- The boundary between the IT and the infrastructure was in the rack itself, which was located in an air-cooled data center and to which the electrical connections were supplied.

The design of the data centers was based on clear standards that defined both redundancy and availability (such as Uptime Institute’s standards) and engineering parameters such as ASHRAE’s standards.

In the “Old World” there were a number of exceptions, such as mainframe computers and supercomputing (HPC). These technologies were anomalies in the “landscape” of data centers, and their installation always required dedicated infrastructure planning.

DLC by cold plate: IBM 3090-400, 1988

The AI era brings new computers with distinctive energy behavior and consumption. The change stems from an extreme increase in the power consumption of the processors themselves and from an increase in the number of processors. From an average of 6-15 kW per rack, power rises to 130-150 kW per AI rack. At this density, air cooling alone is no longer sufficient, and a fundamental change in the cooling method is required: the server must be cooled directly, whether by direct cooling of the processor or by immersion cooling.
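To give a feel for why air alone stops being practical at these densities, the following is a minimal sketch based on the standard heat balance Q = ρ · V · cp · ΔT. The fluid properties are approximate textbook values, and the temperature rises (12 K for air, 10 K for water) are illustrative assumptions, not design figures from any specific project.

```python
# Approximate fluid properties near room temperature (textbook values).
AIR = {"density": 1.2, "cp": 1005.0}       # kg/m^3, J/(kg*K)
WATER = {"density": 997.0, "cp": 4186.0}   # kg/m^3, J/(kg*K)


def volumetric_flow_m3_per_s(heat_w, delta_t_k, fluid):
    """Flow needed to carry heat_w watts at a temperature rise of delta_t_k."""
    return heat_w / (fluid["density"] * fluid["cp"] * delta_t_k)


def rack_cooling_flows(rack_kw, air_delta_t_k=12.0, water_delta_t_k=10.0):
    """Compare the air and water flow needed to remove a rack's full heat load.

    The default temperature rises are assumptions chosen for illustration.
    """
    heat_w = rack_kw * 1000.0
    air = volumetric_flow_m3_per_s(heat_w, air_delta_t_k, AIR)
    water = volumetric_flow_m3_per_s(heat_w, water_delta_t_k, WATER)
    return {
        "rack_kw": rack_kw,
        "air_m3_per_h": air * 3600.0,
        "water_l_per_min": water * 1000.0 * 60.0,
    }


if __name__ == "__main__":
    for kw in (15, 140):
        print(rack_cooling_flows(kw))
```

Under these assumptions, a 15 kW rack needs on the order of 3,700 m³/h of air, while a 140 kW rack would need roughly 35,000 m³/h through a single rack footprint. The same 140 kW can be carried by about 200 L/min of water.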

This change requires different infrastructure planning and raises a number of operational issues that did not exist with air cooling. A fundamental question is where the border between IT and the facility’s infrastructure lies. In the era of air cooling, redundancy was achieved by installing additional air cooling units (CRAHs or In-Rows) that provided backup in the event of a cooling unit failure. With direct liquid cooling (DLC), Single Points of Failure (SPOFs) appear and might cause the IT system to fail in extreme situations such as a pipe leak or malfunction. Fault-tolerant solutions that were possible with air cooling are no longer applicable because of the power density and the rate of temperature rise.

Direct liquid cooling makes it possible to operate at a higher water supply temperature than is used today. It is likely that future installations will have two water cooling systems: one similar to today’s air cooling system and a second for DLC.

Supplying power to HPC for AI presents challenges as well.

AI racks from some manufacturers operate in a 2-out-of-3 source topology. The familiar topology of two power supplies is no longer fully valid, and facilities require a different design topology. Mixing AI-based equipment with conventional equipment may change the energy balance between the power streams and necessitate the construction of facilities with a unique configuration.

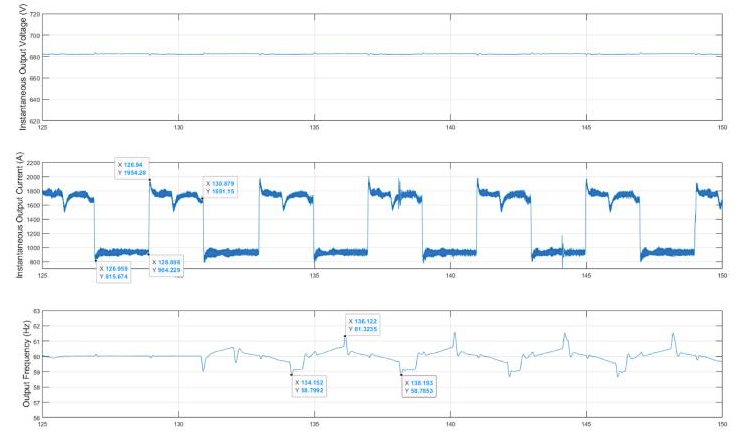
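The effect of mixing dual-fed and three-source equipment on the power streams can be sketched with a small calculation. This is an illustration only: it assumes each rack shares its load equally across its surviving feeds, and the stream names (`A`, `B`, `C`), rack counts, and rack powers are hypothetical values, not data from a real facility.

```python
from collections import defaultdict


def stream_loads(racks, failed=()):
    """Return the load in kW on each power stream.

    racks:  list of (power_kw, feeds) tuples, e.g. (10.0, ("A", "B")).
    failed: streams that are out of service.
    Assumes each rack splits its load equally across its surviving feeds.
    """
    loads = defaultdict(float)
    for power_kw, feeds in racks:
        alive = [f for f in feeds if f not in failed]
        if not alive:
            continue  # rack has lost all of its feeds and is down
        for feed in alive:
            loads[feed] += power_kw / len(alive)
    return dict(loads)


# Hypothetical mix: ten dual-fed 10 kW racks and two 140 kW AI racks
# fed from three sources (any two of which can carry the rack).
racks = [(10.0, ("A", "B"))] * 10 + [(140.0, ("A", "B", "C"))] * 2

if __name__ == "__main__":
    print("normal:  ", stream_loads(racks))
    print("A failed:", stream_loads(racks, failed=("A",)))
```

In this hypothetical mix, streams A and B carry more than stream C in normal operation, and losing stream A pushes B well above C. This is the kind of imbalance between power streams that a mixed facility has to be designed around.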

Furthermore, whereas in the past the power consumption profile of a computer installation and data center was constant and stable, today the profile is “spiky”: current consumption rises and drops rapidly. This phenomenon may affect the harmonics, the UPS systems, and the ability of the generators to back up the equipment in general, and in particular when the UPS transfers to bypass.

Spiky load profile (from “Evaluating the performance of Vertiv™ large UPS systems with AI workloads”)
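One way to put numbers on a spiky profile is to look at its peak-to-average ratio and at the largest load step relative to the rating of the upstream equipment. The sketch below does this for a synthetic profile. The sample values, the 0.1 s sampling interval, and the 1,000 kW rating are invented for illustration and are not measurements of any real system.

```python
def load_profile_metrics(samples_kw, step_s, rated_kw):
    """Summarize how spiky a sampled load profile is.

    samples_kw: load samples in kW taken at a fixed interval.
    step_s:     sampling interval in seconds.
    rated_kw:   rating of the upstream UPS or generator (assumed value).
    """
    average = sum(samples_kw) / len(samples_kw)
    peak = max(samples_kw)
    # Largest change between two consecutive samples, in either direction.
    max_step = max(abs(b - a) for a, b in zip(samples_kw, samples_kw[1:]))
    return {
        "peak_to_average": peak / average,
        "max_step_pct_of_rating": 100.0 * max_step / rated_kw,
        "max_ramp_kw_per_s": max_step / step_s,
    }


# Synthetic profile: the load jumps between 300 kW and 900 kW.
profile_kw = [300, 300, 900, 900, 300, 900, 300, 300]

if __name__ == "__main__":
    print(load_profile_metrics(profile_kw, step_s=0.1, rated_kw=1000.0))
```

A steady load would give a peak-to-average ratio close to 1 and a step size near zero. The further a profile moves from that, the more attention the UPS and generator step-load capability deserves.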

The SLAs that have developed over the years between vendors and customers will need to change, and the boundaries of responsibility between facility and IT will be redefined.

In preparation for the AI era, manufacturers of electromechanical systems are developing new systems designed to address the new characteristics of AI loads, such as UPS systems designed to cope with spiky loads and chillers designed for higher temperatures. These systems are in various stages of development and supply.

It is important to remember that not all data centers and IT equipment will be HPC for AI applications. Many facilities will continue to demand infrastructure with characteristics similar to the existing ones.

The near future will be challenging for customers, suppliers, and design teams: rapid growth, new features, evolving standards, and a variety of infrastructures. In the engineering field, it will be necessary to think outside the box and re-examine paradigms that were cornerstones of the old design. Only creative thinking can assure our customers that future infrastructures will provide an appropriate response to changing technological needs.